Why are the letters on the QWERTY keyboard are arranged the way they are? I think about this a lot, especially in light of the many alternate layouts that claim to optimise for some critical dimension of typing speed or ergonomics. This is a shallow dive into what makes a keyboard layout optimal, and what a poorly-considered “more optimal” arrangement could be.

The QWERTY arrangement was popularised by virtue of its use on the Sholes and Gilden typewriter, one of the first commercially successful typewriters. The origin of the actual letter order, though, is less clear. Wikipedia mentions the following, in describing how Christopher Sholes (the Sholes in Sholes and Gilden) developed the letter order for the QWERTY keyboard through trial-and-error:

The study of bigram (letter-pair) frequency by educator Amos Densmore, brother of the financial backer James Densmore, is believed to have influenced the array of letters, but the contribution was later called into question.

It’s also not clear exactly what Sholes was optimising for in this trial-and-error process. I’d assume constructablility of the mechanism and usability for the typist, but it could also have been related to minimising the average number of points in letters in a given row.

Given that the keyboard’s mechanism is no longer a factor in ordering the letters, we can focus on usability for the typist. An early study on typing1 gives us key design features for maximising typing speeds:

- Maximise typing on the home (middle) row

- Maximise alternation between the left and right hands

- Equalise loads on the left and right hands

How can we design a keyboard that optimises for each of the above features, both individually and together?

Assumptions

To answer the question above, we’ll need some data and we’ll need to make some assumptions. Assume we’re typing in (American) English, using a QWERTY keyboard2. When talking about letter frequency, we’re assuming all letters are lowercase, or that ignoring uppercase letters won’t have a significant effect. Assume no effect on letter frequency of using American vs. British English - British English has more U’s and fewer Z’s - as we’re using American English because the data is easier to find. We’ll also ignore the fact that, in spite of the previous assumption, this post is written in British English.

For criteria 1 and 3, we need to know the frequency of letters. For criterion 2, we’ll need to know how likely each letter is to follow any other given letter. We’ll start there.

Alternating Hands

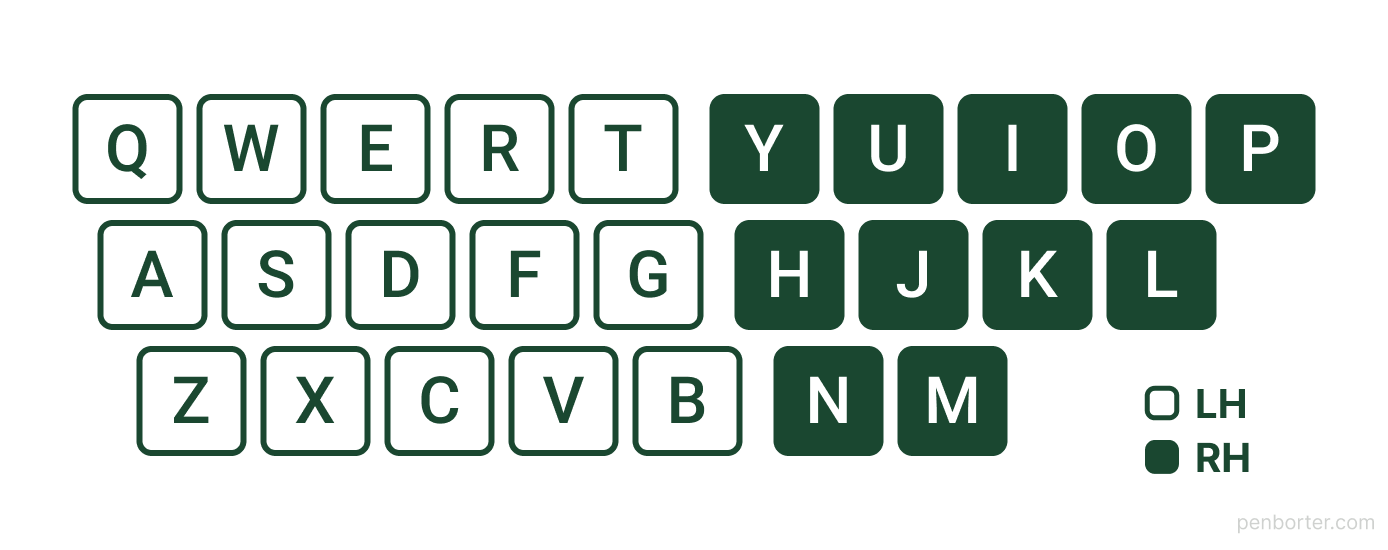

Each letter on a keyboard is assigned to a particular hand (assuming you’re touch typing optimally3). Important to note that on a QWERTY keyboard the left and right hands are assigned different amounts of letters (15 and 11, respectively) because of the symbols at the far right of the keyboard. That’s another assupmtion / restriction (for now): The locations of symbols and numebers will remain the same as the QWERTY keyboard, meaning that the number of letters assigned to each hand will also be the same.

Criterion 2 means we need find letters that are normally typed one after the other, and separate them on the keyboard. By finding common pairs of letters we can split them between the left and right hands.

The letters assigned to each hand on the QWERTY keyboard.

Bigrams

Sequences of two letters are called bigrams. Each of the 676 possible bigrams in English has a particular frequency, based on common spelling patterns and word usage. Knowledge of these frequencies, like individual letter frequencies, can be used to breaking cyphers through frequency analysis. They’re also used in language models for speech and lanaguage identification, speech recognition, and text-to-speech.

Peter Norvig, director of research at Google, has published data on English bigram frequencies4, with a sample of 6,670,825,274,245 bigrams. IN is the commonest, appearing a touch more than 2% of the time. JQ is the least common, with a frequency of 0.00004%. Letter order affects frequency: while TH occurs almost 2% of the time in the sample, HT appears about 20x less often, just over 0.1% of the time.

That being said, in our case the order of bigrams is not important. We want to measure the probability of any two letters being typed sequentially, regardless of order. Higher probability pairs need to be separated with a higher priority on our new keyboard so that the letters are alternating hands as often as possible.

Given some keyboard letter arrangement, we can see which bigrams are alternately typed and calculate the total frequency of all of these bigrams. This total frequency divided by the number of instances of all bigrams, is the probability that a given string of two letters will be typed using alternate hands on this layout.

Calculating Alternation

To calculate the probability of alternation, we start with a 26x26 matrix of bigram probabilities, with an entry for every bigram AB for each A and B. Given a subset of letters, we can identify the bigrams that are split across right and left hands; the remaining bigrams are those that can be typed with a single hand. If we add the probabilities of all of the alternating bigrams, we get the probability that any given bigram will be alternately typed. Essentially, we’re counting the occurrences of all alternating bigrams in our data set, and dividing by the total number of occurrences.

We can define a simple Python function, given a set of letters assigned to one hand, which calculates the likelihood that a given bigram will be split up.

def bigramScore(selectLetters):

# All the leftover letters in the alphabet

excludedLetters = np.setdiff1d(the_alphabet, selectLetters)

# Slice of bigramProbs with excluded letters as cols and select letters as rows, sum all values

# Do it again with the rows / cols swapped to get both directions (i.e. P(A|B) and P(B|A))

splitProb = bigramProbs[excludedLetters].loc[bigramProbs.index.isin(selectLetters)].to_numpy().sum()

splitProb += bigramProbs[selectLetters].loc[bigramProbs.index.isin(excludedLetters)].to_numpy().sum()

return splitProb

Existing Keyboards

The QWERTY keyboard separates almost exactly half of all bigrams (50.05%); that is, given any two letters to type you have a 1 in 2 chance of typing them with different hands. That’s a good starting point, but it feels like we’re leaving a bit on the table. August Dvorak developed a keyboard layout (the Dvorak keyboard) ostensibly focusing on hand alternation in the development process, and managed to crank the separation of bigrams all the way up to 67.8%.

Can we do better?

Optimising Alternation

In the time it would have taken me to learn and optimise whatever algorithm is appropriate for this problem, I instead just ran a brute-force check on every selection of letters5 to find the optimal set of letters in terms of bigram splitting.

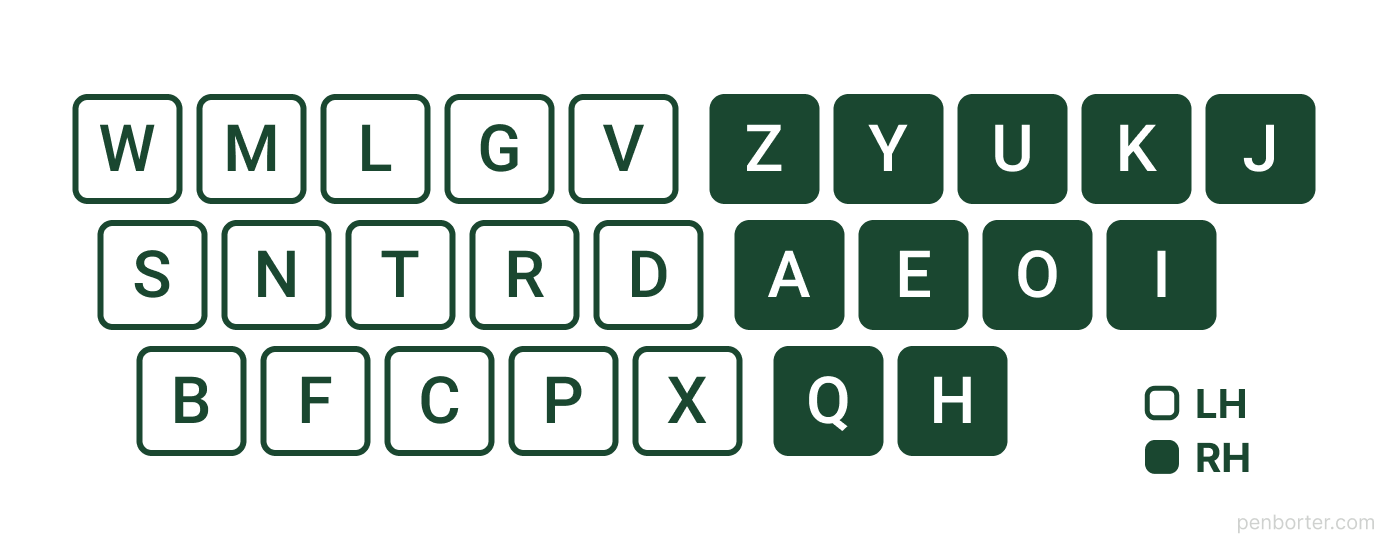

Here is the optimal (bigram alternation-maximising) split of letters between the right and left hand:

- Left: B, C, D, F, G, L, M, N, P, R, S, T, V, W, X

- Right: A, E, H, I, J, K, O, Q, U, Y, Z

If we loosen the assumption about the number of letters assigned to each hand, it turns out more imbalance is better in terms of increasing alternation: Allocating only 10 letters to the right hand results in a 0.1% improvement. This is probably because the vowels and common consonants appear so much more than the other letters, so keeping the as many of the remaining uncommon consonsants off to one side as possible is better. There’s no more improvement assigning only 9 letters or fewer to one hand.

Letter Frequency

Alfred Vail, who was involved in the early development of telegraphy and Morse Code, estimated letter frequency in English by counting the amount of moveable type in the type-cases of a local newspaper. These days, thanks to huge amount of data on the internet (and Peter Norvig specifically), we can now use a larger reference set with trillions of letters, instead of thousands. Even so, the frequencies measured by Vail in the early 19th Century weren’t too far off.

ETAOIN SHRDLU

Traditionally, the order of frequency of the 12 most common letters was given as ETAOIN SHRDLU. Again, this is pretty close to what we can measure given the petabytes of English-language content we have available. The only key difference is swapping out the U at the end with C instead.

The QWERTY keyboard only has 4 of these letters in the home row, and has somehow ended up with 6 in the top row alone.

Given the relative frequency of letters in English, the optimal split of letters between the right and left hands, and criteria 1 and 3 for maximising typing speed (repeated below), we can start to piece together an optimised keyboard.

Criterion 1 tells us that we should assign the most frequent letters to the middle row of the keyboard, and work our way out from there. Criterion 3 tells us that we should work from the centre out, with the commonest letters for each hand under the index fingers.

We can order the set of letters assigned to each hand by their relative frequency, and assign them from the middle of the home row to the edge, then from the middle to the edge of the top and bottom rows.

Finally, with all of this taken into consideration and with very little fanfare, I present the WMLGVZ keyboard:

The WMLGVZ keyboard.

It looks wrong, but it is oh so right. Unfortunately, I think that the time it would take in completely retraining myself to use a new keyboard layout would overwhelm what is probably a minimal amount of efficiency gain in constantly alternating hands and barely having to leave the home row.

A jupyter notebook will be available on Github.

-

Norman and Rummelhart (1983), Studies of typing from the LNR research group. ↩

-

Whether it’s the US or International version doesn’t effect the letter arrangement. ↩

-

I.e. exactly as per the guidebook. It’s worth noting that there are minor difference in what constitutes “optimal” touch typing depending on who you ask. ↩

-

Counts for all 2-letter (lowercase) bigrams from the Google Web Trillion Word Corpus. Some of the counts don’t match those in other places on the web, but I’m rolling with this data set for now. ↩

-

26 choose 11 = 7,726,160 ↩